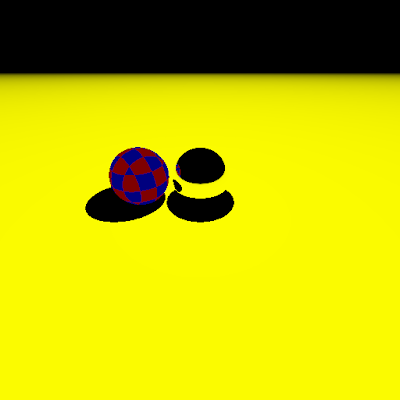

I would definitely agree that someone can sit down and bust out a simple ray tracer. When I was learning Python, I hacked together a 500-line script to render a simple scene. It has shadows, reflective materials, and a couple of primitives that would have to be discretized into triangles to render with a rasterizer. The only real library I used was an image library to actually put the image into a window.

- For handling reflections, I just reflect the ray direction off the surface and send it out again

- When shading something, I fire a ray towards the light source and if I hit something before I get there, I shadow the surface

- I added a procedural checker material with only a few lines of code based on the surface position
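To give a feel for how little code those three bullets need, here is a minimal sketch. It is not the original script: the helper names (`reflect`, `hit_sphere`, `in_shadow`, `checker`), the tuple-based vectors, and the sphere-only scene are assumptions made for illustration.

```python
import math

# Tiny 3-vector helpers on plain tuples (illustrative, not from the original script).
def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(v, s):
    return (v[0] * s, v[1] * s, v[2] * s)

def reflect(direction, normal):
    """Mirror a direction about a unit normal: r = d - 2 (d . n) n."""
    return sub(direction, scale(normal, 2.0 * dot(direction, normal)))

def hit_sphere(origin, direction, center, radius):
    """Nearest positive hit distance along a unit-length ray, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def in_shadow(point, light_pos, spheres):
    """Fire a ray toward the light; any hit before the light means shadow."""
    to_light = sub(light_pos, point)
    dist = math.sqrt(dot(to_light, to_light))
    direction = scale(to_light, 1.0 / dist)
    origin = add(point, scale(direction, 1e-4))  # nudge off the surface
    for center, radius in spheres:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and t < dist:
            return True
    return False

def checker(point, size=1.0):
    """Procedural checker: 0 or 1 from the parity of the x/z cell."""
    return (math.floor(point[0] / size) + math.floor(point[2] / size)) % 2
```

A recursive `trace` function would call `reflect` and re-fire the ray for mirror surfaces, and use `in_shadow` and `checker` when computing the surface color.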

In my opinion, that's pretty simple code for some rather significant features. If I wanted to add more complicated features like shade trees, it would have been a relatively simple addition. Not only was this script straightforward, but I implemented it right off the top of my head. I don't have the best memory, so I'd like to stress that these concepts are quite intuitive and easy to remember.

Simple Python ray tracer featuring procedural shading, Lambertian and reflective materials, and simple shadows

(source code)

Same work with a rasterizer

Now, let's imagine that I had to write the same kind of functionality in a simple scanline rasterizer.

- the procedural geometry is out--both the plane and the sphere have to be broken up into triangles, which isn't complicated, but surely not as elegant as some vector algebra

- I would need to implement or find a matrix library to handle all my projections, and since I don't have the projection matrix memorized, I'd need to go get a reference of that

- I would also need to implement the actual scanline algorithm to sweep through these polygons and implement a simple depth map

- For shadows, I'd need to render a shadow map making sure the objects casting the shadows are in the light frustum

- For reflections, I'd have to render an environment map, which would involve texture lookups and alignments, etc.

- The procedural checker material would probably be the easiest thing to implement, but I would have to interpolate the vertex attributes to the different pixels
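As a taste of the reference material the projection bullet refers to, here is a sketch of one common perspective matrix (the OpenGL-style convention: camera looking down -z, depth mapped to [-1, 1]). The function names and the choice of convention are assumptions for illustration, and other APIs use different layouts.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """Row-major 4x4 perspective matrix, OpenGL-style (assumed convention)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def project(matrix, point):
    """Transform a camera-space point and apply the perspective divide."""
    v = (point[0], point[1], point[2], 1.0)
    cx, cy, cz, cw = (sum(row[i] * v[i] for i in range(4)) for row in matrix)
    return (cx / cw, cy / cw, cz / cw)

def to_pixel(ndc, width, height):
    """Map normalized device coordinates to pixel coordinates (y pointing down)."""
    px = (ndc[0] * 0.5 + 0.5) * width
    py = (1.0 - (ndc[1] * 0.5 + 0.5)) * height
    return (px, py)
```

Every triangle vertex has to pass through something like `project` and `to_pixel` before the scanline sweep can even start, which is exactly the bookkeeping the ray tracer never needed.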

I certainly can't elegantly fit all that in under 500 lines of code. Implementing a few basic visual effects is evidently much simpler with ray tracing.

Where is Rasterization Simpler?

Ray tracing models light transport in a physically realistic manner. It handles pretty much any "effect" rasterization can do, but it comes at a big cost--mostly performance. Most current ray tracing research is about making the algorithm faster, which requires some very complex code to be written.

First, ray tracing pretty much requires the entire scene, including textures, to fit into local memory. For example, if you fire a ray into the scene above and it hits the ball, that ball needs to know about the plane below it and the ball to the left of it to do a reflection. That's simple enough in this case, but what happens if your scene is five gigabytes and you have four gigabytes of RAM? You have to fetch that primitive from the hard drive and load it into memory, which is ridiculously slow. And the very next ray may require another hard drive swap if it hits something not in memory.

One easy solution is to break your scene up into manageable layers and composite them, but then you have to start keeping track of what layer needs what. For example, if you have a lake reflecting the far-off mountains, both need to be in the same layer.

What's with these acceleration structures?

And what of these acceleration structures? Ray tracing is often touted as being able to push more geometry than rasterization, because an acceleration structure typically allows the ray to prune large groups of primitives and avoid costly intersections. That's certainly nice if you happen to have one, but where do these structures come from? You build them yourself! And if you happen to be playing a game that requires any kind of deforming animation (almost any 3D game nowadays), you have to rebuild these structures every frame.
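The pruning idea can be sketched with the standard "slab" ray/box test. This is a deliberately flat version, assuming each group of primitives already comes with an axis-aligned bounding box; a real structure such as a BVH or kd-tree nests these boxes hierarchically, and the function names here are made up for illustration.

```python
def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does the ray (t >= 0) overlap the axis-aligned box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0 = (lo - o) / d
        t1 = (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True

def candidates(origin, direction, groups):
    """Keep only primitives whose group box the ray touches.

    groups is a list of (box_min, box_max, primitives) tuples.
    """
    result = []
    for box_min, box_max, prims in groups:
        if ray_hits_box(origin, direction, box_min, box_max):
            result.extend(prims)
    return result
```

One cheap box test can skip every primitive inside a group, but the boxes are only valid as long as the geometry inside them has not moved, which is where the rebuild cost comes from.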

Despite the research in this field, it's still expensive to maintain these structures as the scene changes. State of the Art in Ray Tracing Animated Scenes discusses some of the more recent techniques to speed up this process, but as Dr. Wald says, "There's no silver bullet." You have to pick which acceleration structure to use in a given situation, and then you have to implement it efficiently.

With a rasterizer, you have to linearly go through your entire list of geometry. But if we're animating and deforming that geometry anyway, that's fine. With a ray tracer, animation means having to rebuild your acceleration structures. Why does ray tracing make animation so complicated when it should be so simple?

Ray Tracing Incoherency

Ray tracing is inherently incoherent in many ways. Firing a ray (or rays) through every pixel makes it difficult to know what object the renderer will want in the cache. For example, if one pixel hits a blade of grass, that geometry needs to be loaded into the cache. If the very next pixel hits another piece of geometry, that blade may need to be swapped out again. For visibility rays you can optimize this a little bit, but secondary rays (the entire purpose of ray tracing) go all over the place. Many elaborate schemes have been developed to attack this problem, but it's a difficult property that comes with ray tracing and won't be solved any time soon.

A rasterizer is inherently coherent. When you load a gigabyte-sized model into RAM along with its gigabyte of textures, you work with that one model until it is fully drawn and you need the next one. When doing a texture lookup, there's a really good chance that chunk of texture is still in your cache, since the previous pixel probably did an adjacent lookup. And when this model is completely rendered, you can throw it away, move on to the next one, and let the depth buffer take care of the overlaps. Doesn't the depth buffer rock?
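The depth buffer trick is small enough to sketch in a few lines. This is a minimal illustration, assuming smaller `z` means closer to the camera; `make_buffers` and `write_fragment` are hypothetical names, not part of any particular API.

```python
def make_buffers(width, height, background=(0, 0, 0)):
    """Create a depth buffer (all infinitely far) and a color buffer."""
    depth = [[float("inf")] * width for _ in range(height)]
    color = [[background] * width for _ in range(height)]
    return depth, color

def write_fragment(depth, color, x, y, z, rgb):
    """Keep the fragment only if it is closer than what is already stored."""
    if z < depth[y][x]:
        depth[y][x] = z
        color[y][x] = rgb
        return True
    return False
```

Because each pixel only remembers its closest fragment so far, models can be drawn in any order and discarded as soon as they are done, without ever needing the rest of the scene in memory.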

Both are Complex

In general, there's nothing really simple about production-quality ray tracing. It basically trades "graphics hacks" for system optimization hacks. David Luebke said at SIGGRAPH 2008, "Rasterization is fast, but needs cleverness to support complex visual effects. Ray tracing supports complex visual effects, but needs cleverness to be fast." Every scene is bottlenecked by time, and getting ray tracing to work within that time isn't always "simple".

nVidia's Interactive Ray Tracing with CUDA presentation